Artificial intelligence in radiation oncology treatment planning: a brief overview

Introduction

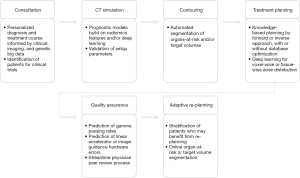

Cancer care delivery generally, and radiotherapy specifically, is highly dependent on integrating data from multiple sources. A single datum can radically change a cancer patient’s management strategy; for example, tumor genetic constitution (1), immunohistologic markers (2), a patient’s demographic profile (3), or radiographic attributes (4) can each alter a patient’s treatment course and/or prognosis. Radiation oncology embraces this complex treatment calculus and has long sought to deliver proven and precise radiotherapy courses (5,6). The data-diverse, technology-intensive nature of radiation oncology places it in a unique position among medical specialties to be revolutionized by the “fourth industrial revolution,” artificial intelligence (AI) (7,8). The goal of this review is to give a brief overview of the role of AI in radiation treatment planning and related applications. Figure 1 organizes the steps in the radiotherapy treatment process and provides example AI use cases for each of them. It augments similar extant literature on this topic, to which the interested reader is referred (8-13).

Terminology of AI, machine learning, and deep learning

It is useful to first clarify the oft-conflated terms “artificial intelligence,” “machine learning (ML)”, and “deep learning (DL)”. AI is the practice and theory of developing “machines that can think and act as intelligently as humans” (14). Early AI pioneers primarily built such machines by programming logic rules into them; however, rule-based AI has largely been subsumed by ML-based AI, especially in healthcare (15,16). Rather than manually programmed logic rules, ML relies on manually curated data inputs to “learn” patterns, often patterns that humans cannot discern and therefore cannot explicitly program as a logic rule. ML methods are based on advanced statistics and mathematics [a prominent ML book refers to it as statistical learning (17)] and there are many algorithms, each with strengths and limitations depending on the types and dimensionality of the input data. In turn, DL is a distinct kind of ML that has yielded spectacular solutions to AI problems. DL architectures are inspired by the brain and facilitated by recent advances in computing speed and power and by immense data stores for training the neural network architectures (16). DL differs from other ML approaches in that it can learn from “raw” data inputs rather than manually curated ones. The current hype surrounding AI is largely catalyzed by DL, which burst into public attention in 2012 (18). For further discussion of DL we refer the reader to reviews on the topic and to two JAMA viewpoints regarding its place in healthcare (15,16,19-22).

Auto segmentation

Manual segmentation (contouring) of target and normal structures is one of the most time-consuming aspects of radiation therapy and is subject to significant intra- and inter-observer variability. Therefore, autosegmentation algorithms have been developed to increase staff efficiency and improve consistency. Historically, the most commonly employed method for structure autosegmentation has been propagation of contours from an “atlas” or library of previously segmented images onto a new image by deformable registration (23,24). However, the accuracy of atlas-based methods suffers significantly when a patient’s anatomy is unusual compared to the reference atlas (25), or when any factor causes tissue contrast to vary. To circumvent this, more images can be added to the atlas to make it more robust, but additional images increase the computational load and decrease the method’s speed.

DL, which to date has achieved broad success in image analysis and computer vision, appears to resolve the time and computation constraints inherent in traditional atlas-based methods. DL algorithms have been trained to segment cancer and organ-at-risk (OAR) structures in the head and neck (26-31), brain (32-34), abdomen (35,36), thorax (37-42), spinal cord (43), breast (44-46), and pelvis (47-50) at accuracies indistinguishable from those of human experts, in some cases with clinical workflow implementation and validation. Several studies have concluded that DL is more accurate than other algorithms with respect to the Dice Similarity Coefficient (DSC) (28,37,47,51,52), a standard volume-overlap evaluation metric used in segmentation literature (53). Segmentation methods based on deep learning are extraordinarily computationally demanding to train, but once the model’s hyperparameters and weights are optimized, running it is computationally trivial and faster than atlas-based methods (26,54). For example, in the setting of lung cancer treatment Zhu et al. (55) directly compared DL and atlas-based OAR segmentations and found that DL produced non-inferior or superior results for every OAR in less time. This time advantage may be particularly significant when adapting the radiation plan while the patient is on the treatment table.
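
As a concrete illustration of the DSC referred to above, the metric for two segmentations A and B is 2|A∩B| / (|A| + |B|), ranging from 0 (no overlap) to 1 (identical). The following is a minimal sketch using only the Python standard library; the function name, the flat 0/1 mask representation, and the toy masks are assumptions made for illustration and are not taken from any of the cited studies.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice Similarity Coefficient between two binary masks.

    Masks are flat sequences of 0/1 values of equal length (one entry per voxel).
    """
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    size_a = sum(1 for v in mask_a if v)
    size_b = sum(1 for v in mask_b if v)
    overlap = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    if size_a + size_b == 0:
        return 1.0  # convention chosen here: two empty masks agree perfectly
    return 2.0 * overlap / (size_a + size_b)


# Toy 1D "image" of 8 voxels: expert contour vs. automated contour (made up).
expert = [0, 1, 1, 1, 1, 0, 0, 0]
auto = [0, 0, 1, 1, 1, 1, 0, 0]
print(dice_coefficient(expert, auto))  # 2*3 / (4+4) = 0.75
```

Because the DSC only counts overlapping volume, two contours with the same score can differ in how much manual correction they need, which is one motivation for alternative metrics.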

The work of Nikolov et al. (27) is an innovative illustration of rapid, accurate DL-based target and OAR segmentation. The authors trained a 3D U-Net architecture end-to-end with 663 CT datasets acquired from their home institution and tested it independently on 24 multi-institutional datasets available from The Cancer Imaging Archive. In a novel step, the authors departed from the DSC evaluation metric in favor of an altered, “surface” DSC that more effectively penalizes segmentations that would require extra time to manually re-contour because of their surface area. The model segmented 19 of 21 OARs as well as expert English radiographers. The two exceptions were the lens and brainstem. Lens segmentation has also been a challenge in other published work, but the performance of the algorithm on the brainstem was explained by discordance among ground-truth segmentation labels regarding where the brainstem begins, leading to poor model segmentation at the brain-brainstem interface. This limitation notwithstanding, the model has the potential to markedly hasten workflow with no compromise in segmentation quality.

Treatment planning and optimization

Our literature search suggests that radiotherapy treatment planning and optimization may currently be the single most popular ML/DL application in radiotherapy, which may reflect physicists’ faith in its potential for improving this task. In 19 of 45 Medline search results addressing radiation oncology and AI from January 2017 through January 2019, automated radiotherapy plan creation and optimization is the primary or secondary aim.

Radiotherapy plan optimization attempts to find the most satisfying solution to competing objectives: deliver the highest radiation dose to the target while delivering the least radiation to surrounding OARs, which are usually assigned a numeric weight to quantify their importance in the optimization calculus. This task requires physicists to iteratively fine-tune parameters that determine radiation dose deposition (usually summarized by dose-volume histograms, or DVHs) until a plan that meets the minimum acceptable threshold for each objective is generated. However, there is no guarantee that the first clinically acceptable plan is the optimal one, and fine-tuning can continue indefinitely until time resources are exhausted and the planner is forced to settle on the best plan achieved so far.
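
To make the DVH concept concrete, a cumulative DVH reports, for each dose level, the fraction of a structure's volume that receives at least that dose. The sketch below is illustrative only: the function names, the equal-voxel-volume assumption, the bin width, and the dose values are all hypothetical and do not reproduce any treatment planning system's implementation.

```python
def cumulative_dvh(voxel_doses_gy, bin_width_gy=1.0):
    """Return (dose_levels, volume_fractions) for a cumulative DVH.

    volume_fractions[i] is the fraction of voxels receiving at least
    dose_levels[i] Gy. All voxels are assumed to have equal volume.
    """
    if not voxel_doses_gy:
        raise ValueError("structure has no voxels")
    n = len(voxel_doses_gy)
    n_bins = int(max(voxel_doses_gy) // bin_width_gy) + 1
    levels = [i * bin_width_gy for i in range(n_bins)]
    fractions = [
        sum(1 for d in voxel_doses_gy if d >= level) / n for level in levels
    ]
    return levels, fractions


def volume_receiving_at_least(voxel_doses_gy, threshold_gy):
    """V_x: fraction of the structure receiving at least threshold_gy."""
    return sum(1 for d in voxel_doses_gy if d >= threshold_gy) / len(voxel_doses_gy)


# Hypothetical OAR with ten equal-volume voxels (doses in Gy, made up).
oar_doses = [2.0, 5.0, 8.0, 12.0, 15.0, 20.0, 22.0, 30.0, 35.0, 41.0]
levels, fractions = cumulative_dvh(oar_doses, bin_width_gy=10.0)
print(levels)     # [0.0, 10.0, 20.0, 30.0, 40.0]
print(fractions)  # [1.0, 0.7, 0.5, 0.3, 0.1]
print(volume_receiving_at_least(oar_doses, 20.0))  # 0.5
```

A planning objective such as "no more than half of the organ may receive 20 Gy or more" then becomes a simple check on one point of this curve.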

Knowledge-based automated planning (KBP) methods have generated considerable attention in recent literature. KBP assists physicians and planners in obtaining the optimal OAR DVHs by employing ML methods that learn from databases of clinically acceptable plans. KBP uses geometric and dosimetric features from plans in the treatment library to predict a range of achievable DVHs for new patients. Obtaining this information early in the treatment process can assist in achieving the optimal treatment plan by forward planning. Alternatively, KBP model dose predictions can be used to directly generate plans by inverse optimization (56). KBP has been used to predict normal tissue DVHs in a variety of disease sites (57-61) and recently to predict which patients may benefit from proton radiation (62).

KBP methods have been commercialized in treatment planning systems (63-68). For example, RapidPlan™ (Varian Medical Systems, Palo Alto, CA) trains on past clinically acceptable plan DICOM files with beam geometry and the structure set on which the planner wishes to create objectives (per-structure minimum of 20 examples) to output an automatic plan with predicted DVHs for all objective OARs. RapidPlan™ can generate plans comparable to expert-generated plans (64,65) and superior to beginner and junior-generated plans (66). The RapidPlan™ ML algorithm details are proprietary but are inspired by Yuan et al. (69), who used stepwise multiple regression to learn anatomic and spatial image features that are germane to radiotherapy planning and then used principal component analysis to determine which of those features accounted for the greatest variance in OAR dose deposition.

As is the case for other applications of knowledge database-based automation, the cost of computation increases and speed decreases with increasing database size. The problem of computational inefficiency with large data inputs was addressed by Liu et al. (70), who greatly reduced the number of inputs to the planning optimization ML algorithm by first grouping voxels that were isodosimetric and spatially related using a k-means clustering algorithm. Computational efficiency was markedly increased without sacrificing plan quality.
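
The general idea of grouping voxels that are close in both space and dose can be sketched with a plain k-means (Lloyd's algorithm) over feature tuples of position and dose. This is not the implementation of Liu et al.; the feature choice, the deterministic initialization, the helper names, and the toy voxel values are assumptions for illustration. Mixing millimetres and gray in one distance is also a simplification, and in practice the features would need to be scaled.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def nearest(p, centroids):
    """Index of the centroid closest to point p."""
    return min(range(len(centroids)), key=lambda c: squared_distance(p, centroids[c]))


def kmeans(points, k, n_iter=50):
    """Plain Lloyd's k-means on tuples of floats.

    Initial centroids are k points spread evenly through the input order,
    a deterministic simplification chosen for reproducibility.
    """
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    step = len(points) // k
    centroids = [points[i * step] for i in range(k)]
    labels = None
    for _ in range(n_iter):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [nearest(p, centroids) for p in points]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels, centroids


# Hypothetical voxels as (x_mm, y_mm, z_mm, dose_gy); all values are made up.
voxels = [
    (0.0, 0.0, 0.0, 60.0), (1.0, 0.0, 0.0, 59.0), (0.0, 1.0, 0.0, 61.0),
    (20.0, 20.0, 0.0, 10.0), (21.0, 20.0, 0.0, 11.0), (20.0, 21.0, 0.0, 9.0),
]
labels, centroids = kmeans(voxels, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

In this toy case six voxels collapse to two cluster centroids, which could stand in for the original voxels as a smaller set of optimizer inputs.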

In aggregate, the literature to date strongly supports the feasibility of KBP automation and outlines possible next steps. Some have suggested that automation methods be trained with true patient outcome data rather than DVH tissue damage proxies (11). Wall et al. (71) hypothesized that if KBP knowledge databases for prostate cancer planning consisted of Pareto-optimized plans rather than merely clinically passable plans, the quality of the DVH predictions would significantly improve. A plan is Pareto-optimized if its competing objectives have been balanced such that any attempt to improve one objective necessarily worsens at least one other. Plans generated by Pareto-trained KBP showed significant improvement compared to plans generated by KBP alone. When past plans were re-planned using the Pareto-trained KBP, the average decreases in dose to the rectum and bladder were 9.4 and 7.8 Gy, respectively, while target dose was maintained.
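
Pareto optimality can be illustrated by filtering a set of candidate plans down to those that no other plan dominates. The sketch below is a generic dominance filter, not the method of Wall et al.; the plan names, the choice of three objectives, and the numeric values are hypothetical, and every objective is treated as a quantity to minimize.

```python
def dominates(plan_a, plan_b):
    """True if plan_a is no worse than plan_b on every objective and
    strictly better on at least one (all objectives are minimized)."""
    no_worse = all(a <= b for a, b in zip(plan_a, plan_b))
    strictly_better = any(a < b for a, b in zip(plan_a, plan_b))
    return no_worse and strictly_better


def pareto_front(plans):
    """Return the subset of plans that no other plan dominates."""
    return {
        name: objectives
        for name, objectives in plans.items()
        if not any(
            dominates(other, objectives)
            for other_name, other in plans.items()
            if other_name != name
        )
    }


# Hypothetical objectives per plan (all made up, lower is better):
# (mean rectum dose in Gy, mean bladder dose in Gy, target underdose in Gy)
plans = {
    "A": (30.0, 25.0, 1.0),
    "B": (28.0, 27.0, 1.0),
    "C": (32.0, 26.0, 1.0),  # worse than A on two objectives, equal on the third
    "D": (28.0, 27.0, 0.5),  # matches B on two objectives, better on the third
}
print(sorted(pareto_front(plans)))  # ['A', 'D']
```

Plans B and C are clinically plausible but improvable at no cost to any other objective, which is the kind of plan the Pareto-trained approach aims to exclude from the knowledge database.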

In addition to KBP, DL has been investigated as a mechanism for automated plan generation (72-75). Fan et al. (73) and Chen et al. (74) independently undertook the similar task of predicting dose distribution with a ResNet DL architecture. Although they trained their models on input data of different types and quantities, both groups demonstrated feasible DL-based automated plans that were similar to expert plans. Cardenas et al. trained a DL auto-encoder to delineate CTV contours that needed little or no manual correction (72). To capture CTV information, they used computed distances between tumor volumes and surrounding OARs as inputs rather than images. This work contributed the first automated clinically usable CTVs and a novel probability threshold function based on the DSC.

To our knowledge, no study has yet compared plan quality between DL and KBP or other automated methods.

Tumor control probability and normal tissue complication probability

Radiomics is an emerging field that extracts quantitative textural, morphologic, and intensity features from images, which may then be used as feature inputs to ML algorithms. Radiomics features are a promising additional data type for oncologic outcome prediction and tumor control probability models (76). Multiple manuscripts have been published using radiomics to predict radiation response, in some cases with predictive power outperforming standard clinical variables (77-82), though not in all (83). Radiomics-based statistical approaches can predict various radiation normal tissue complication probabilities including radiation pneumonitis, xerostomia, and rectal wall toxicity (84-89). Radiomics data, coupled with genomic data and increasingly computable clinical record data, may usher radiation oncology into a new epoch of truly personalized radiation plans based on patient-specific knowledge.

However, important challenges surrounding standardization and reproducibility of radiomics-based predictors exist. For example, radiomics studies suffer from lack of standardization at multiple stages of image acquisition and processing. There is currently no way to reliably compare between MRI radiomics studies, because variations exist among all of them in MRI scanner sequence, scanner vendor, and scan acquisition parameters (90). We refer the interested reader to comprehensive reviews on the use of radiomics in the field of radiation oncology (90-93).

Quality assurance (QA)

Intensity-modulated radiation therapy (IMRT) QA is labor-intensive, and ML may improve the efficiency of the process. ML has been used to predict IMRT QA passing rates (94), detect errors in radiation plans and delivery (95,96), and predict errors in image guidance systems (97). ML also has the potential to streamline the peer review QA process, which requires meticulous attention to detail and is time-consuming for clinicians. It has been used for QA of both target/normal tissue contours and the final radiation treatment plan (98-100). ML has also been leveraged experimentally to correlate real-time dose deposition in proton therapy (101).

Two successive papers led by the University of California at San Francisco speak to the prodigious potential of DL for QA. In the first (94), the group led a multi-institutional effort to validate an ML algorithm for predicting 3%/3 mm gamma passing rates in IMRT plans. As previously mentioned, ML models learn from manually curated feature inputs, which in this circumstance were 78 features purposefully selected by expert physicists. Their ML model predicted passing rates to within 3.5% for 618/637 IMRT plans, illustrating the potential for automation. Subsequently (102), they pitted their ML model against a repurposed DL neural network (AlexNet) that was originally trained on unrelated data and minimally retrained to predict gamma passing rates from raw radiotherapy fluence maps by altering about 4,000 of its network parameters. Although the model was not originally built to interpret fluence maps and the maps contained no curated features, the reconfigured AlexNet performed as well as the physicist-crafted ML algorithm.
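
For readers unfamiliar with the quantity being predicted, a gamma passing rate combines a dose-difference criterion and a distance-to-agreement (DTA) criterion: for each reference point, gamma is the minimum over evaluated points of sqrt((Δr/DTA)² + (ΔD/ΔD_tol)²), and the point passes if gamma ≤ 1. The following one-dimensional sketch is illustrative only. It assumes global normalization (the dose tolerance is a percentage of the maximum reference dose), applies no low-dose threshold or interpolation, and uses made-up dose profiles; clinical QA software differs in all of these details.

```python
import math


def gamma_passing_rate(positions_mm, ref_dose, eval_dose, dose_pct=3.0, dta_mm=3.0):
    """Percentage of reference points with gamma <= 1 on a shared 1D grid.

    Assumes global normalization: the dose tolerance is dose_pct percent
    of the maximum reference dose.
    """
    dose_tol = dose_pct / 100.0 * max(ref_dose)
    passed = 0
    for r_i, d_ref in zip(positions_mm, ref_dose):
        # Search every evaluated point for the best combined agreement.
        gamma = min(
            math.sqrt(((r_j - r_i) / dta_mm) ** 2 + ((d_eval - d_ref) / dose_tol) ** 2)
            for r_j, d_eval in zip(positions_mm, eval_dose)
        )
        if gamma <= 1.0:
            passed += 1
    return 100.0 * passed / len(ref_dose)


# Hypothetical 1D dose profiles sampled every 1 mm (arbitrary units, made up).
positions = [0.0, 1.0, 2.0, 3.0, 4.0]
reference = [10.0, 50.0, 100.0, 50.0, 10.0]
delivered = [10.0, 50.0, 100.0, 50.0, 30.0]  # last point is badly off

print(gamma_passing_rate(positions, reference, reference))  # 100.0
print(gamma_passing_rate(positions, reference, delivered))  # 80.0
```

In the second call, the last reference point fails because no evaluated point is simultaneously within 3 mm and within the dose tolerance, so four of five points pass.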

Adaptive treatment planning support

Adaptive radiation therapy can be described as changing the radiation treatment plan during a treatment course in response to changes in anatomy (e.g., tumor shrinkage/progression, weight loss) or tumor biology (e.g., biomarkers). ML algorithms have been developed to identify patients that may benefit from adaptive re-planning for head and neck cancer and prostate cancer (103-105). ML algorithms for online adaptive magnetic resonance guided radiotherapy have been developed for patients with gastrointestinal cancers in which a daily optimized plan is generated before each treatment based on changes in anatomy seen on the magnetic resonance imaging scan (35,106). Interested readers are referred to a detailed review on the role of machine learning in adaptive radiotherapy (107).

A report by Fu et al. (35) illustrates the advantage afforded by DL for adaptive treatment. Radiation oncologists using an MRIdian MRI-linear accelerator (ViewRay Inc., Oakwood Village, OH, USA) at Washington University in St. Louis found that manual OAR contouring during online adaptive RT was onerous. Therefore, a DL convolutional neural network (CNN) was developed to automatically segment the liver, kidneys, stomach, bowel, and duodenum. To improve its accuracy, two additional CNN correction networks were integrated in the DL architecture and provided feedback that helped it learn anatomic constraints. The point of these correction CNNs was to learn spatial continuity information by sampling relatively large kernels (3×3×3 voxels) and to use that information to improve the output CNN contours. The CNN showed excellent DSC and Hausdorff distance (HD) results for the liver and kidney (immobile organs) but only fair results for the stomach, bowel and duodenum (mobile, spatially variable organs). Despite this limitation, the automated contours cut manual segmentation time by 75%.
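
In segmentation literature HD usually denotes the Hausdorff distance, which complements the DSC by measuring the worst-case boundary disagreement between two contours rather than their volume overlap. The sketch below is a minimal brute-force version over point sets. The function names and the toy contours are assumptions, and published studies often report a percentile variant (for example the 95th percentile) rather than the maximum shown here.

```python
import math


def directed_hausdorff(points_a, points_b):
    """Largest distance from any point in A to its nearest point in B."""
    return max(min(math.dist(a, b) for b in points_b) for a in points_a)


def hausdorff_distance(points_a, points_b):
    """Symmetric Hausdorff distance between two non-empty point sets."""
    return max(
        directed_hausdorff(points_a, points_b),
        directed_hausdorff(points_b, points_a),
    )


# Toy 2D contours in millimetres (made up): one vertex of the automated
# contour has strayed 3 mm from the manual one.
manual = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
automated = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 4.0)]
print(hausdorff_distance(manual, automated))  # 3.0
```

A single stray vertex dominates the result, which is why this metric is sensitive to local errors that a volume-overlap score can hide.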

Obstacles

Although AI technologies have proven able to surmount many technical obstacles, significant political, legal, and ethical considerations remain to be resolved before widespread clinical implementation occurs.

A foremost concern is that the hidden neuronal architectures that afford DL such astounding predictive power are also its principal liability: DL is a “black box” in which predictions are made without human understanding of which features the network elects to use or the exact statistical calculus by which it elected those features. This is a challenge to ensuring patient safety and clinician acceptance. Although the use of “saliency maps” (e.g., identifying the area of a chest X-ray which most contributes to the prediction) (108) is of benefit, this issue is not satisfactorily resolved (109). Other efforts have attempted to teach DL “common sense” (110). In reality, various automated clinical decision support systems (CDSS) that accomplish specific tasks as well as physicians have existed for 40 years, but clinical adoption has met skepticism (111). Physicians are distrustful of delegating consequential diagnostic and therapeutic decisions to CDSS that they do not understand. For this reason, clinician education in AI is highly salient to AI integration in clinical workflows. Incorporation of the concepts of AI into medical education, radiation oncology residency, and continuing education may accelerate the future of AI in healthcare (112).

As previously discussed, the accuracy of ML outputs depends directly on the quality and quantity of input data. Indeed, it is usually the training data, rather than the nuances of algorithm selection or mathematical parameters, that most profoundly influences the algorithm’s generalizability and accuracy. Consider Google’s highly accurate algorithm for detecting diabetic retinopathy, which was trained on a data set of 128,175 retinal images (98). This is a significant challenge in radiation oncology, where institutions are siloed and where institutional datasets are small. The best ML and DL algorithms will likely emerge from multi-institutional cooperatives. Nevertheless, multi-institutional data sharing will require standardized datasets and stringent privacy regulations. To obviate the need for securely sending and receiving HIPAA-sensitive datasets between institutions, an approach called distributed learning has emerged, in which data from multiple institutions are remotely and securely accessed without the data ever leaving the cybersecurity confines of their own institution (113,114). Another possible approach (for DL only) is transfer learning, in which neural architectures are trained on very large datasets of unrelated data, and then retrained using a much smaller database of interest (transfer learning was exemplified in the discussion of repurposing AlexNet) (115,116). Additionally, the American College of Radiology has opened the Data Science Institute, which is a hub for radiology professionals, industry leaders, government agencies, patients, and other stakeholders to collaborate and resolve obstacles to the development and implementation of AI. One of its express goals is to set standards for AI interoperability. This will help create an open source standard framework for AI use case development (117). Within radiation oncology, following a standardized nomenclature for radiotherapy planning structures ensures that data are FAIR (Findable, Accessible, Interoperable, and Reusable) (118) and easily computable. The American Association of Physicists in Medicine Task Group 263 report provides a framework for this nomenclature (119).
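
One common way to realize the distributed learning idea described above is federated averaging, in which each institution trains on its own data and shares only model parameters with a coordinating server. The sketch below uses that technique as a stand-in and does not reproduce the systems in the cited references. The model (a one-variable linear regression), the learning rate, the round counts, the function names, and the synthetic data are all assumptions for illustration.

```python
def local_update(weights, data, lr=0.02, epochs=20):
    """Gradient descent on one institution's private (x, y) pairs.

    Only the updated weights are returned; `data` never leaves this function.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates, sizes):
    """Average site models, weighting each by its number of samples."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return (w, b)


def train_federated(sites, rounds=200):
    """Alternate local training and server-side averaging."""
    weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(weights, data) for data in sites]
        weights = federated_average(updates, [len(d) for d in sites])
    return weights


# Synthetic data from y = 2x + 1, split across three hypothetical sites.
site_a = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
site_b = [(3.0, 7.0), (4.0, 9.0)]
site_c = [(1.0, 3.0), (5.0, 11.0), (6.0, 13.0), (2.0, 5.0)]

w, b = train_federated([site_a, site_b, site_c])
print(round(w, 3), round(b, 3))  # approximately 2.0 and 1.0
```

The coordinating loop only ever sees the pair of weights from each site, which is the property that makes this family of methods attractive when patient data cannot be pooled.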

Finally, we echo a caution against possible unintended ethical consequences of ML, including the introduction of bias which could worsen health disparities (120). Since ML is only as good as the data we train it with, even data replete with our biases, we risk creating machines that are far more efficient and consistent at implementing our biases than we are. Furthermore, care must be taken to avoid introducing technologies that divide physician-patient relationships. The top-down, government-subsidized implementation of healthcare information technologies since the 2009 HITECH Act has resulted in unanticipated and unintended consequences for physician burnout and patient “e-iatrogenesis” (121-124). Prudent AI use cases should be identified, and these should be modest, simple, and fit fluidly into existing physician workflows.

Summary

We believe the literature evidence discussed above soundly supports our introductory assertion that radiotherapy treatment planning is primed to be revolutionized by AI. We have focused particularly on ML and DL use cases for hastening, improving the quality of, and decreasing the interobserver variability of image segmentation, treatment plan creation, QA, and patient-customized adaptive re-planning and treatment course personalization. AI has demonstrated potential for advancing radiation oncology treatment planning, but significant challenges remain before its widespread implementation in the clinical setting.

Acknowledgments

Funding: None.

Footnote

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/jmai.2019.04.02). CD Fuller is funded by 1R01CA225190-01, 1R01DE025248-01A1, and 1R01CA214825-01 NIH grants, a grant from the Nederlandse Organisatie voor Wetenschappenlijk Onde, and a grant from Elekta AB. VK Reed serves as an unpaid editorial board member of Journal of Medical Artificial Intelligence. KJK has no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Louis DN, Perry A, Reifenberger G, et al. The 2016 World Health Organization Classification of Tumors of the Central Nervous System: a summary. Acta Neuropathol 2016;131:803-20. [Crossref] [PubMed]

- Ang KK, Harris J, Wheeler R, et al. Human papillomavirus and survival of patients with oropharyngeal cancer. N Engl J Med 2010;363:24-35. [Crossref] [PubMed]

- Siegel RL, Miller KD, Jemal A. Cancer statistics, 2019. CA Cancer J Clin 2019;69:7-34. [Crossref] [PubMed]

- Aerts HJ, Velazquez ER, Leijenaar RT, et al. Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat Commun 2014;5:4006. [Crossref] [PubMed]

- Baumann M, Krause M, Overgaard J, et al. Radiation oncology in the era of precision medicine. Nat Rev Cancer 2016;16:234-49. [Crossref] [PubMed]

- Jaffray DA. Image-guided radiotherapy: from current concept to future perspectives. Nat Rev Clin Oncol 2012;9:688-99. [Crossref] [PubMed]

- El Naqa I, Brock K, Yu Y, et al. On the fuzziness of machine learning, Neural Networks, and artificial intelligence in radiation oncology. Int J Radiat Oncol Biol Phys 2018;100:1-4. [Crossref] [PubMed]

- Thompson RF, Valdes G, Fuller CD, et al. Artificial intelligence in Radiation Oncology: A specialty-wide disruptive transformation? Radiother Oncol 2018;129:421-6. [Crossref] [PubMed]

- Thompson RF, Valdes G, Fuller CD, et al. Artificial Intelligence in Radiation Oncology Imaging. Int J Radiat Oncol Biol Phys 2018;102:1159-61. [Crossref] [PubMed]

- Thompson RF, Valdes G, Fuller CD, et al. The Future of Artificial Intelligence in Radiation Oncology. Int J Radiat Oncol Biol Phys 2018;102:247-8. [Crossref] [PubMed]

- Hussein M, Heijmen BJM, Verellen D, et al. Automation in intensity modulated radiotherapy treatment planning-a review of recent innovations. Br J Radiol 2018;91:20180270. [Crossref] [PubMed]

- Bibault JE, Giraud P, Burgun A. Big Data and machine learning in Radiation Oncology: State of the art and future prospects. Cancer Lett 2016;382:110-7. [Crossref] [PubMed]

- Kearney V, Chan JW, Valdes G, et al. The application of artificial intelligence in the IMRT planning process for head and neck cancer. Oral Oncol 2018;87:111-6. [Crossref] [PubMed]

- Kowalski R. Computational Logic and Human Thinking: How to Be Artificially Intelligent. Cambridge: Cambridge University Press, 2011.

- Naylor CD. On the Prospects for a Deep Learning Health Care System. JAMA 2018;320:1099-100. [Crossref] [PubMed]

- Hinton G. Deep learning-a technology with the potential to transform health care. JAMA 2018;320:1101-2. [Crossref] [PubMed]

- Friedman J, Tibshirani R, Hastie T. The Elements of Statistical Learning. Dordrecht: Springer, 2009.

- Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional Neural Networks. Advances in Neural Information Processing Systems 2012;

- LeCun Y, Bengio Y, Hinton G. Deep learning. Nature 2015;521:436-44. [Crossref] [PubMed]

- Schmidhuber J. Deep Learning in Neural Networks: An overview. Neural Netw 2015;61:85-117. [Crossref] [PubMed]

- Chen XW, Lin X. Big data deep learning: Challenges and perspectives. IEEE Access 2014;2:514-25.

- Jordan MI, Mitchell TM. Machine learning: Trends, perspectives, and prospects. Science 2015;349:255-60. [Crossref] [PubMed]

- Walker GV, Awan M, Tao R, et al. Prospective randomized double-blind study of atlas-based organ-at-risk autosegmentation-assisted radiation planning in head and neck cancer. Radiother Oncol 2014;112:321-5. [Crossref] [PubMed]

- Anders LC, Stieler F, Siebenlist K, et al. Performance of an atlas-based autosegmentation software for delineation of target volumes for radiotherapy of breast and anorectal cancer. Radiother Oncol 2012;102:68-73. [Crossref] [PubMed]

- Johnstone E, Wyatt JJ, Henry AM, et al. Systematic review of synthetic computed tomography generation methodologies for use in magnetic resonance imaging-only radiation therapy. Int J Radiat Oncol Biol Phys 2018;100:199-217. [Crossref] [PubMed]

- Ibragimov B, Xing L. Segmentation of organs-at-risks in head and neck CT images using convolutional Neural Networks. Med Phys 2017;44:547-57. [Crossref] [PubMed]

- Nikolov S, Blackwell S, Mendes R, et al. Deep learning to achieve clinically applicable segmentation of head and neck anatomy for radiotherapy. arXiv preprint 2018.

- Zhu W, Huang Y, Zeng L, et al. AnatomyNet: Deep learning for fast and fully automated whole-volume segmentation of head and neck anatomy. Med Phys 2019;46:576-89. [Crossref] [PubMed]

- Zhao L, Lu Z, Jiang J, et al. Automatic nasopharyngeal carcinoma segmentation using fully convolutional networks with auxiliary paths on dual-modality PET-CT images. J Digit Imaging 2019; [Epub ahead of print]. [Crossref] [PubMed]

- Močnik D, Ibragimov B, Xing L, et al. Segmentation of parotid glands from registered CT and MR images. Phys Med 2018;52:33-41. [Crossref] [PubMed]

- Tong N, Gou S, Yang S, et al. Fully automatic multi-organ segmentation for head and neck cancer radiotherapy using shape representation model constrained fully convolutional Neural Networks. Med Phys 2018;45:4558-67. [Crossref] [PubMed]

- Charron O, Lallement A, Jarnet D, et al. Automatic detection and segmentation of brain metastases on multimodal MR images with a deep convolutional neural network. Comput Biol Med 2018;95:43-54. [Crossref] [PubMed]

- Liu Y, Stojadinovic S, Hrycushko B, et al. A deep convolutional neural network-based automatic delineation strategy for multiple brain metastases stereotactic radiosurgery. PLoS One 2017;12:e0185844. [Crossref] [PubMed]

- Zhuge Y, Krauze AV, Ning H, et al. Brain tumor segmentation using holistically nested Neural Networks in MRI images. Med Phys 2017;44:5234-43. [Crossref] [PubMed]

- Fu Y, Mazur TR, Wu X, et al. A novel MRI segmentation method using CNN‐based correction network for MRI ‐guided adaptive radiotherapy. Med Phys 2018;45:5129-37. [Crossref] [PubMed]

- Ibragimov B, Toesca D, Chang D, et al. Combining deep learning with anatomical analysis for segmentation of the portal vein for liver SBRT planning. Phys Med Biol 2017;62:8943-58. [PubMed]

- Yang J, Veeraraghavan H, Armato SG, et al. Autosegmentation for thoracic radiation treatment planning: A grand challenge at AAPM 2017. Med Phys 2018;45:4568-81. [Crossref] [PubMed]

- Wang T, Lei Y, Tang H, et al. A learning-based automatic segmentation and quantification method on left ventricle in gated myocardial perfusion SPECT imaging: A feasibility study. J Nucl Cardiol 2019; [Epub ahead of print]. [Crossref] [PubMed]

- Trullo R, Petitjean C, Dubray B, et al. Multiorgan segmentation using distance-aware adversarial networks. J Med Imaging (Bellingham) 2019;6:014001. [Crossref] [PubMed]

- Zhong Z, Kim Y, Plichta K, et al. Simultaneous cosegmentation of tumors in PET-CT images using deep fully convolutional networks. Med Phys 2019;46:619-33. [PubMed]

- Tahmasebi N, Boulanger P, Noga M, et al. A fully convolutional deep neural network for lung tumor boundary tracking in MRI. Conf Proc IEEE Eng Med Biol Soc 2018;2018:5906-9. [PubMed]

- Dormer JD, Ma L, Halicek M, et al. Heart chamber segmentation from CT using convolutional Neural Networks. Proc SPIE Int Soc Opt Eng 2018;10578.

- Diniz JOB, Diniz PHB, Valente TLA, et al. Spinal cord detection in planning CT for radiotherapy through adaptive template matching, IMSLIC and convolutional Neural Networks. Comput Methods Programs Biomed 2019;170:53-67. [Crossref] [PubMed]

- Ha R, Chin C, Karcich J, et al. Prior to Initiation of Chemotherapy, Can We Predict Breast Tumor Response? Deep Learning Convolutional Neural Networks Approach Using a Breast MRI Tumor Dataset. J Digit Imaging 2018; [Epub ahead of print]. [Crossref] [PubMed]

- Mambou SJ, Maresova P, Krejcar O, et al. Breast Cancer Detection Using Infrared Thermal Imaging and a Deep Learning Model. Sensors (Basel) 2018; [Crossref] [PubMed]

- Men K, Zhang T, Chen X, et al. Fully automatic and robust segmentation of the clinical target volume for radiotherapy of breast cancer using big data and deep learning. Phys Med 2018;50:13-9. [Crossref] [PubMed]

- Macomber MW, Phillips M, Tarapov I, et al. Autosegmentation of prostate anatomy for radiation treatment planning using deep decision forests of radiomic features. Phys Med Biol 2018;63:235002. [Crossref] [PubMed]

- Wang B, Lei Y, Tian S, et al. Deeply supervised 3D fully convolutional networks with group dilated convolution for automatic MRI prostate segmentation. Med Phys 2019;46:1707-18. [Crossref] [PubMed]

- Balagopal A, Kazemifar S, Nguyen D, et al. Fully automated organ segmentation in male pelvic CT images. Phys Med Biol 2018;63:245015. [Crossref] [PubMed]

- Larsson R, Xiong JF, Song Y, et al. Automatic delineation of the clinical target volume in rectal cancer for radiation therapy using three-dimensional fully convolutional Neural Networks. Conf Proc IEEE Eng Med Biol Soc 2018;2018:5898-901. [PubMed]

- Men K, Dai J, Li Y. Automatic segmentation of the clinical target volume and organs at risk in the planning CT for rectal cancer using deep dilated convolutional neural networks. Med Phys 2017;44:6377-89. [Crossref] [PubMed]

- Lustberg T, van Soest J, Gooding M, et al. Clinical evaluation of atlas and deep learning based automatic contouring for lung cancer. Radiother Oncol 2018;126:312-7. [Crossref] [PubMed]

- Dice LR. Measures of the amount of ecologic association between species. Ecology 1945;26:297-302. [Crossref]

- Edmund JM, Nyholm T. A review of substitute CT generation for MRI-only radiation therapy. Radiat Oncol 2017;12:28. [Crossref] [PubMed]

- Zhu J, Zhang J, Qiu B, et al. Comparison of the automatic segmentation of multiple organs at risk in CT images of lung cancer between deep convolutional neural network-based and atlas-based techniques. Acta Oncol 2019;58:257-64. [Crossref] [PubMed]

- Babier A, Boutilier JJ, McNiven AL, et al. Knowledge-based automated planning for oropharyngeal cancer. Med Phys 2018;45:2875-83. [Crossref] [PubMed]

- Tol JP, Dahele M, Gregoire V, et al. Analysis of EORTC-1219-DAHANCA-29 trial plans demonstrates the potential of knowledge-based planning to provide patient-specific treatment plan quality assurance. Radiother Oncol 2019;130:75-81. [Crossref] [PubMed]

- Chatterjee A, Serban M, Abdulkarim B, et al. Performance of knowledge-based radiation therapy planning for the glioblastoma disease site. Int J Radiat Oncol Biol Phys 2017;99:1021-8. [Crossref] [PubMed]

- Nwankwo O, Mekdash H, Sihono DSK, et al. Knowledge-based radiation therapy (KBRT) treatment planning versus planning by experts: validation of a KBRT algorithm for prostate cancer treatment planning. Radiat Oncol 2015;10:111. [Crossref] [PubMed]

- Ziemer BP, Shiraishi S, Hattangadi-Gluth JA, et al. Fully automated, comprehensive knowledge-based planning for stereotactic radiosurgery: Preclinical validation through blinded physician review. Pract Radiat Oncol 2017;7:e569-e578. [Crossref] [PubMed]

- Yang Y, Ford EC, Wu B, et al. An overlap-volume-histogram based method for rectal dose prediction and automated treatment planning in the external beam prostate radiotherapy following hydrogel injection. Med Phys 2013;40:011709. [Crossref] [PubMed]

- Delaney AR, Dahele M, Tol JP, et al. Using a knowledge-based planning solution to select patients for proton therapy. Radiother Oncol 2017;124:263-70. [Crossref] [PubMed]

- Powis R, Bird A, Brennan M, et al. Clinical implementation of a knowledge based planning tool for prostate VMAT. Radiat Oncol 2017;12:81. [Crossref] [PubMed]

- Wu H, Jiang F, Yue H, et al. A dosimetric evaluation of knowledge-based VMAT planning with simultaneous integrated boosting for rectal cancer patients. J Appl Clin Med Phys 2016;17:78-85. [Crossref] [PubMed]

- Chin Snyder K, Kim J, Reding A, et al. Development and evaluation of a clinical model for lung cancer patients using stereotactic body radiotherapy (SBRT) within a knowledge-based algorithm for treatment planning. J Appl Clin Med Phys 2016;17:263-75. [Crossref] [PubMed]

- Wang J, Hu W, Yang Z, et al. Is it possible for knowledge-based planning to improve intensity modulated radiation therapy plan quality for planners with different planning experiences in left-sided breast cancer patients? Radiat Oncol 2017;12:85. [Crossref] [PubMed]

- Tol JP, Dahele M, Delaney AR, et al. Can knowledge-based DVH predictions be used for automated, individualized quality assurance of radiotherapy treatment plans? Radiat Oncol 2015;10:234. [Crossref] [PubMed]

- Hazell I, Bzdusek K, Kumar P, et al. Automatic planning of head and neck treatment plans. J Appl Clin Med Phys 2016;17:272-82. [Crossref] [PubMed]

- Yuan L, Ge Y, Lee WR, et al. Quantitative analysis of the factors which affect the interpatient organ-at-risk dose sparing variation in IMRT plans. Med Phys 2012;39:6868-78. [Crossref] [PubMed]

- Liu H, Xing L. Isodose feature-preserving voxelization (IFPV) for radiation therapy treatment planning. Med Phys 2018;45:3321-9. [Crossref] [PubMed]

- Wall PDH, Carver RL, Fontenot JD. Impact of database quality in knowledge-based treatment planning for prostate cancer. Pract Radiat Oncol 2018;8:437-44. [Crossref] [PubMed]

- Cardenas CE, McCarroll RE, Court LE, et al. Deep learning algorithm for auto-delineation of high-risk oropharyngeal clinical target volumes with built-in dice similarity coefficient parameter optimization function. Int J Radiat Oncol Biol Phys 2018;101:468-78. [Crossref] [PubMed]

- Fan J, Wang J, Chen Z, et al. Automatic treatment planning based on three-dimensional dose distribution predicted from deep learning technique. Med Phys 2019;46:370-81. [Crossref] [PubMed]

- Chen X, Men K, Li Y, et al. A feasibility study on an automated method to generate patient-specific dose distributions for radiotherapy using deep learning. Med Phys 2019;46:56-64. [Crossref] [PubMed]

- Kajikawa T, Kadoya N, Ito K, et al. Automated prediction of dosimetric eligibility of patients with prostate cancer undergoing intensity-modulated radiation therapy using a convolutional neural network. Radiol Phys Technol 2018;11:320-7. [Crossref] [PubMed]

- Hricak H. 2016 new horizons lecture: Beyond imaging-radiology of tomorrow. Radiology 2018;286:764-75. [Crossref] [PubMed]

- Larue RTHM, Klaassen R, Jochems A, et al. Pre-treatment CT radiomics to predict 3-year overall survival following chemoradiotherapy of esophageal cancer. Acta Oncol 2018;57:1475-81. [Crossref] [PubMed]

- Starkov P, Aguilera TA, Golden DI, et al. The use of texture-based radiomics CT analysis to predict outcomes in early-stage non-small cell lung cancer treated with stereotactic ablative radiotherapy. Br J Radiol 2019;92:20180228. [Crossref] [PubMed]

- Lopez CJ, Nagornaya N, Parra NA, et al. Association of radiomics and metabolic tumor volumes in radiation treatment of glioblastoma multiforme. Int J Radiat Oncol Biol Phys 2017;97:586-95. [Crossref] [PubMed]

- Zhang B, Tian J, Dong D, et al. Radiomics features of multiparametric MRI as novel prognostic factors in advanced nasopharyngeal carcinoma. Clin Cancer Res 2017;23:4259-69. [Crossref] [PubMed]

- Lafata KJ, Hong JC, Geng R, et al. Association of pre-treatment radiomic features with lung cancer recurrence following stereotactic body radiation therapy. Phys Med Biol 2019;64:025007. [Crossref] [PubMed]

- Giannini V, Mazzetti S, Bertotto I, et al. Predicting locally advanced rectal cancer response to neoadjuvant therapy with 18F-FDG PET and MRI radiomics features. Eur J Nucl Med Mol Imaging 2019;46:878-88. [Crossref] [PubMed]

- Sun W, Jiang M, Dang J, et al. Effect of machine learning methods on predicting NSCLC overall survival time based on radiomics analysis. Radiat Oncol 2018;13:197. [Crossref] [PubMed]

- Rossi L, Bijman R, Schillemans W, et al. Texture analysis of 3D dose distributions for predictive modelling of toxicity rates in radiotherapy. Radiother Oncol 2018;129:548-53. [Crossref] [PubMed]

- van Dijk LV, Thor M, Steenbakkers RJHM, et al. Parotid gland fat related magnetic resonance image biomarkers improve prediction of late radiation-induced xerostomia. Radiother Oncol 2018;128:459-66. [Crossref] [PubMed]

- Gabryś HS, Buettner F, Sterzing F, et al. Design and selection of machine learning methods using radiomics and dosiomics for normal tissue complication probability modeling of xerostomia. Front Oncol 2018;8:35. [Crossref] [PubMed]

- Abdollahi H, Mahdavi SR, Mofid B, et al. Rectal wall MRI radiomics in prostate cancer patients: Prediction of and correlation with early rectal toxicity. Int J Radiat Biol 2018;94:829-37. [Crossref] [PubMed]

- Krafft SP, Rao A, Stingo F, et al. The utility of quantitative CT radiomics features for improved prediction of radiation pneumonitis. Med Phys 2018;45:5317-24. [Crossref] [PubMed]

- Pota M, Scalco E, Sanguineti G, et al. Early prediction of radiotherapy-induced parotid shrinkage and toxicity based on CT radiomics and fuzzy classification. Artif Intell Med 2017;81:41-53. [Crossref] [PubMed]

- Jethanandani A, Lin TA, Volpe S, et al. Exploring applications of radiomics in magnetic resonance imaging of head and neck cancer: A systematic review. Front Oncol 2018;8:131. [Crossref] [PubMed]

- Arimura H, Soufi M, Kamezawa H, et al. Radiomics with artificial intelligence for precision medicine in radiation therapy. J Radiat Res 2019;60:150-7. [Crossref] [PubMed]

- El Naqa I, Pandey G, Aerts H, et al. Radiation therapy outcomes models in the era of radiomics and radiogenomics: Uncertainties and validation. Int J Radiat Oncol Biol Phys 2018;102:1070-3. [Crossref] [PubMed]

- Nie K, Al-Hallaq H, Li XA, et al. NCTN assessment on current applications of radiomics in oncology. Int J Radiat Oncol Biol Phys 2019; [Epub ahead of print]. [Crossref] [PubMed]

- Valdes G, Chan MF, Lim SB, et al. IMRT QA using machine learning: A multi-institutional validation. J Appl Clin Med Phys 2017;18:279-84. [Crossref] [PubMed]

- Kalet AM, Gennari JH, Ford EC, et al. Bayesian network models for error detection in radiotherapy plans. Phys Med Biol 2015;60:2735-49. [Crossref] [PubMed]

- Nyflot MJ, Thammasorn P, Wootton LS, et al. Deep learning for patient-specific quality assurance: Identifying errors in radiotherapy delivery by radiomic analysis of gamma images with convolutional neural networks. Med Phys 2019;46:456-64. [PubMed]

- Valdes G, Morin O, Valenciaga Y, et al. Use of TrueBeam developer mode for imaging QA. J Appl Clin Med Phys 2015;16:322-33. [Crossref] [PubMed]

- Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016;316:2402-10. [Crossref] [PubMed]

- Brown WE, Sung K, Aleman DM, et al. Guided undersampling classification for automated radiation therapy quality assurance of prostate cancer treatment. Med Phys 2018;45:1306-16. [Crossref] [PubMed]

- McIntosh C, Svistoun I, Purdie TG. Groupwise conditional random forests for automatic shape classification and contour quality assessment in radiotherapy planning. IEEE Trans Med Imaging 2013;32:1043-57. [Crossref] [PubMed]

- Gueth P, Dauvergne D, Freud N, et al. Machine learning-based patient specific prompt-gamma dose monitoring in proton therapy. Phys Med Biol 2013;58:4563-77. [Crossref] [PubMed]

- Interian Y, Rideout V, Kearney VP, et al. Deep nets vs expert designed features in medical physics: An IMRT QA case study. Med Phys 2018;45:2672-80. [Crossref] [PubMed]

- Guidi G, Maffei N, Vecchi C, et al. A support vector machine tool for adaptive tomotherapy treatments: Prediction of head and neck patients criticalities. Phys Med 2015;31:442-51. [Crossref] [PubMed]

- Guidi G, Maffei N, Meduri B, et al. A machine learning tool for re-planning and adaptive RT: A multicenter cohort investigation. Phys Med 2016;32:1659-66. [Crossref] [PubMed]

- Guidi G, Maffei N, Vecchi C, et al. Expert system classifier for adaptive radiation therapy in prostate cancer. Australas Phys Eng Sci Med 2017;40:337-48. [Crossref] [PubMed]

- Liang F, Qian P, Su KH, et al. Abdominal, multi-organ, auto-contouring method for online adaptive magnetic resonance guided radiotherapy: An intelligent, multi-level fusion approach. Artif Intell Med 2018;90:34-41. [Crossref] [PubMed]

- Tseng HH, Luo Y, Ten Haken RK, et al. The role of machine learning in knowledge-based response-adapted radiotherapy. Front Oncol 2018;8:266. [Crossref] [PubMed]

- Rajpurkar P, Irvin J, Zhu K, et al. CheXNet: Radiologist-level pneumonia detection on chest X-rays with deep learning. arXiv 2017.

- Challen R, Denny J, Pitt M, et al. Artificial intelligence, bias and clinical safety. BMJ Qual Saf 2019;28:231-7. [Crossref] [PubMed]

- Knight W. An AI is playing Pictionary to figure out how the world works. MIT Technology Review. Available online: https://www.technologyreview.com/s/612882/an-ai-is-playing-pictionary-to-figure-out-how-the-world-works/

- Shortliffe EH, Sepulveda MJ. Clinical decision support in the era of artificial intelligence. JAMA 2018;320:2199-200. [Crossref] [PubMed]

- AMA passes first policy recommendations on augmented intelligence. American Medical Association. Available online: https://www.ama-assn.org/press-center/press-releases/ama-passes-first-policy-recommendations-augmented-intelligence

- Jochems A, Deist TM, El Naqa I, et al. Developing and validating a survival prediction model for NSCLC patients through distributed learning across 3 countries. Int J Radiat Oncol Biol Phys 2017;99:344-52. [Crossref] [PubMed]

- Jochems A, Deist TM, van Soest J, et al. Distributed learning: Developing a predictive model based on data from multiple hospitals without data leaving the hospital – A real life proof of concept. Radiother Oncol 2016;121:459-67. [Crossref] [PubMed]

- Samala RK, Chan H-P, Hadjiiski LM, et al. Multi-task transfer learning deep convolutional neural network: Application to computer-aided diagnosis of breast cancer on mammograms. Phys Med Biol 2017;62:8894-908. [PubMed]

- Byra M, Styczynski G, Szmigielski C, et al. Transfer learning with deep convolutional neural network for liver steatosis assessment in ultrasound images. Int J Comput Assist Radiol Surg 2018;13:1895-903. [Crossref] [PubMed]

- American College of Radiology Data Science Institute Strategic Plan. Reston, VA: Data Science Institute of the American College of Radiology, 2017. Available online: https://www.acrdsi.org/-/media/DSI/Files/Strategic-Plan.pdf?la=en

- Traverso A, van Soest J, Wee L, et al. The radiation oncology ontology (ROO): Publishing linked data in radiation oncology using semantic web and ontology techniques. Med Phys 2018;45:e854-e862. [Crossref] [PubMed]

- AAPM Task Group. Standardizing Nomenclatures in Radiation Oncology. The Report of AAPM Task Group 263, January 2018.

- Khullar D. A.I. Could Worsen Health Disparities. The New York Times. January 31, 2019.

- Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: The nature of patient care information system-related errors. J Am Med Inform Assoc 2004;11:104-12. [Crossref] [PubMed]

- Weiner JP, Kfuri T, Chan K, et al. "e-Iatrogenesis": the most critical unintended consequence of CPOE and other HIT. J Am Med Inform Assoc 2007;14:387-8; discussion 389. [Crossref] [PubMed]

- Fred HL, Scheid MS. Physician burnout: Causes, consequences, and (?) cures. Tex Heart Inst J 2018;45:198-202. [Crossref] [PubMed]

- McDonald CJ, Callaghan FM, Weissman A, et al. Use of internist's free time by ambulatory care electronic medical record systems. JAMA Intern Med 2014;174:1860-3. [Crossref] [PubMed]

Cite this article as: Kiser KJ, Fuller CD, Reed VK. Artificial intelligence in radiation oncology treatment planning: a brief overview. J Med Artif Intell 2019;2:9.